So that puts us down to 15.33TB actual usable capacity in the cluster.

So we are down to 46TB we now need to consider our Replication Factor (RF), so let’s say we leave it at the default of RF 3, so we need to divide our remaining capacity by our RF, in this case 3, to allow for the 2 additional copies of all data blocks. So let’s say we spec up 5 x HyperFlex Nodes each contributing 10TB RAW capacity to the cluster which gives us 50 TB RAW, but let’s allow for that 8% of Meta Data overhead (4TB) So 500 x 50GB gives us a requirement of 25TB capacity. Obviously in the real world vCPU to Physical Core ratios and vRAM requirements would also be worked out and factored in.īut let’s keep the numbers nice and simple and again not necessarily “real world” and plan for an infrastructure to support 500 VM’s each requiring 50GB of storage. I’m only going to base this example on the storage requirement, as the main point of this post is to show how the Replication Factor (RF) affects capacity and availability.

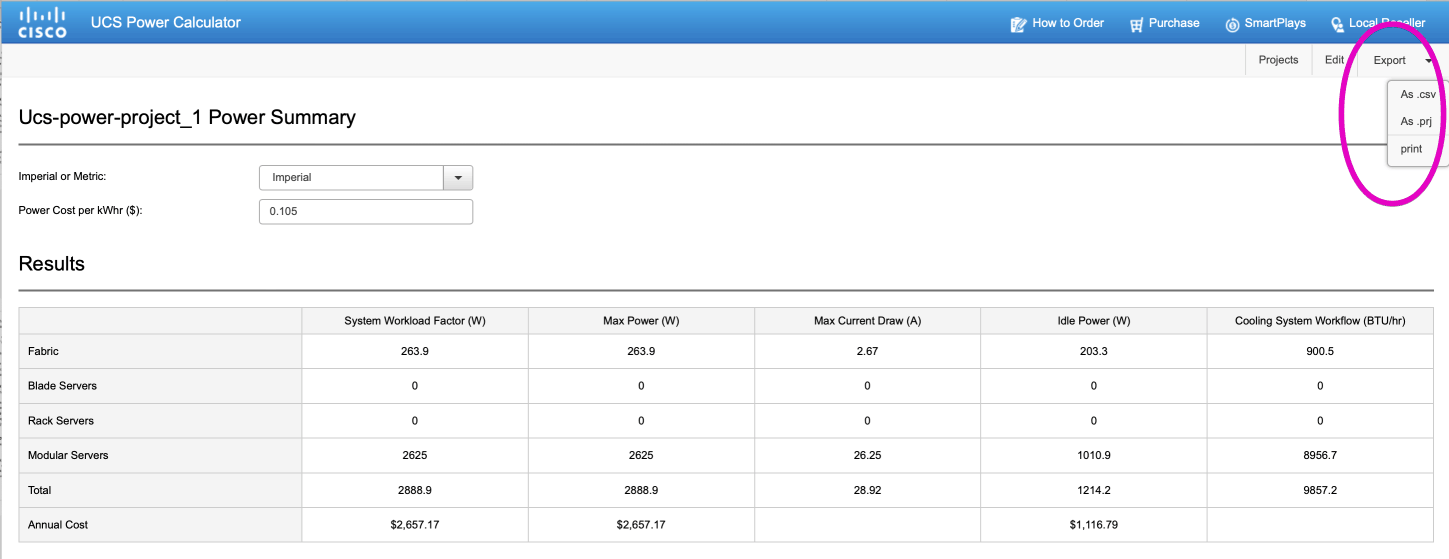

#CISCO POWER CALCULATION TOOL MANUAL#

The last figure to bear in mind is our old friend N+1, so factor in enough capacity to cope with a single node failure, planned or otherwise, while still maintaining your minimum capacity requirement.Īs in most cases, it may be clearer if I give a quick manual example. For persistent user data and general Virtual Server Infrastructure (VSI) VM’s the capacity savings are still a not to be sniffed at 20-50%. VDI VM’s will practically take up no space at all, and will give circa 95% capacity savings. Now for some good news, what the Availability Gods taketh away, HyperFlex can giveth back! remember that “always on, nothing to configure, minimal performance impacting” Deduplication and Compression I mentioned in my last post? Well it really makes great use of that remaining available capacity. *No space available for writing onto the storage device There is 8% capacity that needs to be factored in for the Meta data ENOSPC* buffer In short the more hosts and disks there are on which to stripe the data across the less likely it is that multiple failures will effect replicas of the same data. So let’s look at the two replication factors available:Īs you can no doubt determine from the above, the more nodes you have in your cluster the more available it becomes, and able to withstand multiple failure points. It is absolutely fine to have multiple clusters with different RF settings within the same UCS Domain. Availability optimised for production and capacity optimised for Test/Dev for example.

In reality you may decide to choose a different replication factor based on the cluster use case, i.e. The setting that determines this is the Replication FactorĪs the replication factor is set at cluster creation time, and cannot be changed once set, it is worth thinking about. If you size your cluster for maximum capacity obviously you will lose some availability, likewise if you optimise your cluster for availability you will not have as much usable capacity available to you. The most significant design decision to take, is whether to optimise your HyperFlex cluster for Capacity or Availability, as with most things in life there is a trade-off to be made.

#CISCO POWER CALCULATION TOOL HOW TO#

Since my post below, Cisco have released a Hyperflex profiler and sizer tool, for more info of where to get it and how to install and use it, refer to the below video by Joost van der Made.īut still feel free to read my original post below, as it will teach you the under the covers Math.Ĭalculating the usable capacity of your HyperFlex cluster under all scenarios is worth taking some time to fully understand
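The arithmetic in the worked example can be captured in a few lines of Python. This is a minimal sketch and not Cisco's sizer logic. It makes three assumptions: every node contributes the same RAW capacity, the metadata buffer is a flat 8% of RAW, and usable capacity is whatever remains divided by the RF. The function name `usable_capacity_tb` and its `failed_nodes` parameter are hypothetical. The `failed_nodes` parameter lets the same formula serve the N+1 check, which asks how much capacity is left while one node is down.

```python
"""Sketch of the manual HyperFlex capacity arithmetic from the worked example.

Assumptions (not Cisco's sizer logic): every node contributes the same RAW
capacity, metadata overhead is a flat 8% of RAW, and usable capacity is the
remainder divided by the Replication Factor (RF).
"""

METADATA_OVERHEAD = 0.08  # 8% metadata/ENOSPC buffer quoted in the post


def usable_capacity_tb(nodes: int, raw_per_node_tb: float, rf: int,
                       failed_nodes: int = 0) -> float:
    """Return usable TB after metadata overhead and RF.

    failed_nodes (hypothetical parameter) models N+1: capacity left while
    that many nodes are out of the cluster.
    """
    if rf < 1:
        raise ValueError("rf must be at least 1")
    if not 0 <= failed_nodes < nodes:
        raise ValueError("failed_nodes must leave at least one node")
    raw_tb = (nodes - failed_nodes) * raw_per_node_tb
    after_metadata_tb = raw_tb * (1 - METADATA_OVERHEAD)
    # Every block is stored rf times, so only 1/rf of the space holds unique data.
    return after_metadata_tb / rf


if __name__ == "__main__":
    required_tb = 500 * 50 / 1000  # 500 VMs x 50GB = 25TB (decimal units)
    normal = usable_capacity_tb(nodes=5, raw_per_node_tb=10, rf=3)
    n_plus_1 = usable_capacity_tb(nodes=5, raw_per_node_tb=10, rf=3,
                                  failed_nodes=1)
    print(f"required: {required_tb:.2f} TB")
    print(f"usable, all nodes up: {normal:.2f} TB")  # 15.33 TB
    print(f"usable, one node down (N+1): {n_plus_1:.2f} TB")
    print(f"meets requirement with a node down: {n_plus_1 >= required_tb}")
```

With the example's numbers, the sketch reproduces the 15.33TB figure. Under the same assumptions, it also shows usable capacity dropping to roughly 12.27TB while one of the five nodes is out. That lower figure is the one to compare against the 25TB requirement when applying N+1.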

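The deduplication and compression savings quoted above can be applied to the same 500 x 50GB example. This sketch treats each saving as a single flat percentage of the logical data, applied before replication. That lets the result be compared directly with post-RF usable capacity. `physical_need_tb` is a hypothetical helper, and real savings depend heavily on the workload.

```python
"""Sketch: how a dedupe/compression saving shrinks the capacity you must provision.

Assumption: the saving is one flat fraction of logical data, applied before
replication, so the result can be compared with usable (post-RF) capacity.
"""


def physical_need_tb(logical_tb: float, savings: float) -> float:
    """Capacity consumed after a fractional saving (0.95 means 95%)."""
    if not 0.0 <= savings < 1.0:
        raise ValueError("savings must be in [0, 1)")
    return logical_tb * (1.0 - savings)


if __name__ == "__main__":
    logical_tb = 25.0      # 500 VMs x 50GB from the worked example
    usable_tb = 46.0 / 3   # 5 x 10TB RAW, minus 8% metadata, divided by RF 3
    # Savings figures quoted in the post: VSI 20-50%, VDI circa 95%.
    for label, saving in [("VSI low", 0.20), ("VSI high", 0.50), ("VDI", 0.95)]:
        need = physical_need_tb(logical_tb, saving)
        print(f"{label:8s} saving {saving:.0%}: need {need:.2f} TB, "
              f"fits in {usable_tb:.2f} TB usable: {need <= usable_tb}")
```

Under this simple model, the low end of the VSI range (20TB) still exceeds the 15.33TB usable figure. The high end of the range (12.5TB) and the VDI case both fit.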

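The claim above is that more hosts and disks make it less likely that multiple failures hit replicas of the same data. A simple combinatorial model can illustrate it. This is an assumption-laden sketch, not HyperFlex's actual placement algorithm. In the model, each block's `rf` copies sit on distinct nodes chosen uniformly at random, and the failed nodes are also random. For one given block, the chance that every copy sits on a failed node is C(failed, rf) / C(nodes, rf). `p_block_lost` is a hypothetical helper name.

```python
"""Sketch: why more nodes make multi-failure data loss less likely.

Simplified model (an assumption, not HyperFlex's real placement logic): each
block keeps `rf` copies on `rf` distinct nodes chosen uniformly at random,
and `failed` nodes fail simultaneously, also chosen uniformly at random.
"""
from math import comb


def p_block_lost(nodes: int, rf: int, failed: int) -> float:
    """Probability that every replica of one given block is on a failed node."""
    if not 1 <= rf <= nodes or not 0 <= failed <= nodes:
        raise ValueError("need 1 <= rf <= nodes and 0 <= failed <= nodes")
    # All rf replica nodes must be inside the failed set; comb returns 0 if rf > failed.
    return comb(failed, rf) / comb(nodes, rf)


if __name__ == "__main__":
    for nodes in (3, 5, 8, 16):
        rf2 = p_block_lost(nodes, rf=2, failed=2)
        rf3 = p_block_lost(nodes, rf=3, failed=2)
        print(f"{nodes:2d} nodes, 2 simultaneous failures: "
              f"RF2 {rf2:.3f}, RF3 {rf3:.3f}")
```

With two simultaneous failures, the RF 2 probability falls from 1/3 on three nodes to 1/120 on sixteen. Under RF 3 it is zero, because two failures can never cover three distinct nodes. These figures are per block. Across millions of blocks spread over every node pair, two simultaneous failures under RF 2 are still likely to hit both copies of at least some block.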